Speech XAI: explaining the reasons behind speech model predictions

Published:

Status: Available ✅

from The AI Summer

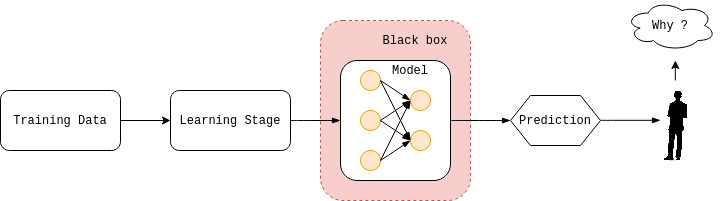

Speech XAI (explainable AI for speech) studies why speech models make the predictions they do. This emerging field aims to make speech recognition and synthesis systems more transparent and interpretable. Techniques such as attention mechanisms, saliency maps, and feature importance analysis let users see which parts of the input drove a particular prediction. Understanding a model's decision process in this way fosters trust and accountability, and it enables targeted improvements toward more accurate and reliable speech-based applications.
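To make the idea of feature importance concrete, here is a minimal sketch of occlusion-based importance over time segments of a waveform. It is an illustration under stated assumptions, not the pipeline this thesis will develop: `occlusion_importance` and `toy_score` are hypothetical names, and `toy_score` is a stand-in for a real speech model's confidence in its predicted class.

```python
from typing import Callable, List, Sequence


def occlusion_importance(
    signal: Sequence[float],
    score_fn: Callable[[Sequence[float]], float],
    segment_len: int,
) -> List[float]:
    """Return one value per segment: the score drop when that segment is silenced."""
    baseline = score_fn(signal)
    importances = []
    for start in range(0, len(signal), segment_len):
        end = min(start + segment_len, len(signal))
        occluded = list(signal)
        # Replace the segment with silence and measure how much the score changes.
        occluded[start:end] = [0.0] * (end - start)
        importances.append(baseline - score_fn(occluded))
    return importances


def toy_score(signal: Sequence[float]) -> float:
    """Hypothetical model stand-in: mean energy of samples 4..7 (an assumption)."""
    window = signal[4:8]
    return sum(x * x for x in window) / len(window)


# Toy waveform: a loud burst in the middle, quiet elsewhere.
signal = [0.1, -0.1, 0.1, -0.1, 1.0, -1.0, 1.0, -1.0, 0.1, -0.1, 0.1, -0.1]
importances = occlusion_importance(signal, toy_score, segment_len=4)
most_important = max(range(len(importances)), key=importances.__getitem__)
```

With a real model, `score_fn` would wrap a forward pass and return the probability of the predicted class, so the segments with the largest score drop are those the prediction depends on most.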

The main objectives of this thesis are:

- Analyze state-of-the-art XAI techniques.

- Design a novel pipeline to analyze and debug speech models and their predictions.

- Demonstrate the effectiveness of the proposed approach on established benchmarks (e.g., SUPERB).

References: